Motivation

Web data record extraction is a problem of extracting repeated sets of semantically related elements from web pages. Effective evaluation of web data record extraction is hindered by static benchmarks and opaque scoring, preventing fair comparisons between traditional heuristics and zero-shot LLM methods in multiple domains.

Method

1. Dataset Construction

- MHTML (MIME + HTML) snapshots to ensure all content is available in the file

- Diverse domains including e‑commerce, news, travel, etc.

- Semi-automated annotation: Web pages are first annotated by LLM then refined by humans.

2. Preprocessing for LLMs

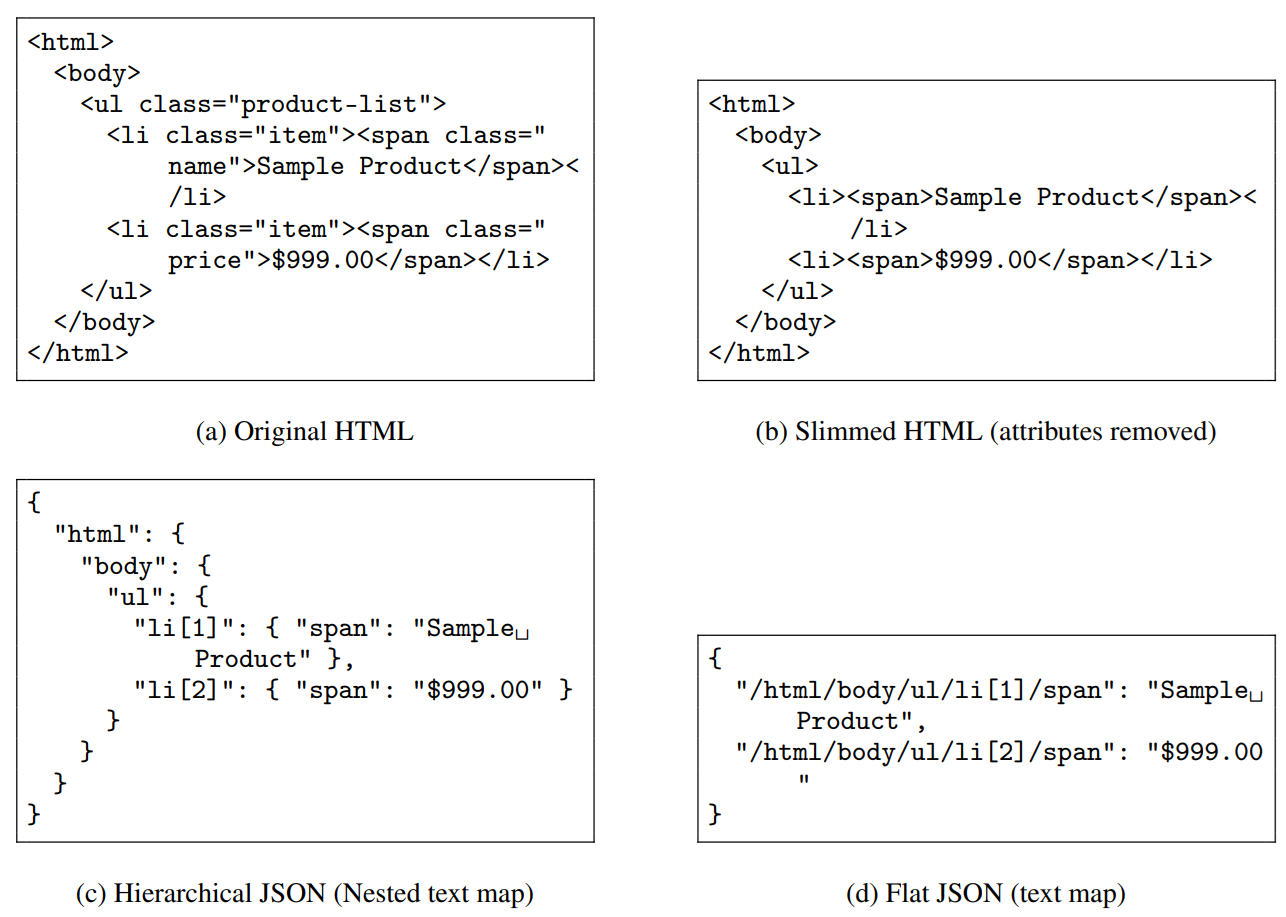

- Full HTML (~861K tokens): Cannot be used as it is too large for LLM (Gemini 2.5) input.

- Slimmed HTML (~86K tokens): Remove unneeded attributes such as id, class.

- Hierarchical JSON (~34K tokens): JSON version of the webpage.

- Flat JSON (~117K tokens): Direct XPath-to-text mapping.

3. Evaluation Metrics

- Overlap-based F1 through Jaccard similarity between predictions and ground truth, with optimal matching via Hungarian algorithm.

- Hallucination rate: a predicted record is hallucinated if all its XPaths fail to match any ground-truth XPath.

📈 Results

| Method | Input Format | Precision | Recall | F1 | Hallucination Rate |

|---|---|---|---|---|---|

| MDR | HTML | 0.0746 | 0.1593 | 0.0830 | 0.0000 |

| Gemini 2.5 | Slimmed HTML | 0.1217 | 0.0969 | 0.1014 | 0.9146 |

| Gemini 2.5 | Hierarchical JSON | 0.4932 | 0.3802 | 0.4058 | 0.5976 |

| Gemini 2.5 | Flat JSON | 0.9939 | 0.9392 | 0.9567 | 0.0305 |

The Flat JSON format dramatically boosts LLM performance with near-zero hallucination, outperforming both traditional heuristics and other input formats.

🧠 Contributions

- Dataset framework for creating evaluation datasets from MHTML snapshots.

- Structure-aware metrics to compare heuristic algorithms with LLMs.

- Preprocessing strategies that optimize LLM input.

- Public semi-synthetic dataset by modifying content from original web pages.

👀 Why It Matters

This work provides a standardized, transparent benchmark for comparing extraction approaches. It reveals how input representation critically influences LLM performance and minimization of hallucination.

❓ Remaining Questions

- Even with 12278 records, a dataset of 164 web pages seems very small. Can we calculate a 95% confidence interval and show it is small enough to rank the pre-processing strategies?

- What is the distribution of the 164 web pages? The authors give domain counts but omit page-length and record-count histograms. How does the per-slice performance differ for each slice of the dataset?

- How can we detect and resolve hallucinations? Can they be caught easily and “fixed” when we don’t have ground truth?

- Prompt engineering: Could better instructions narrow the gap, especially for Slimmed-HTML?